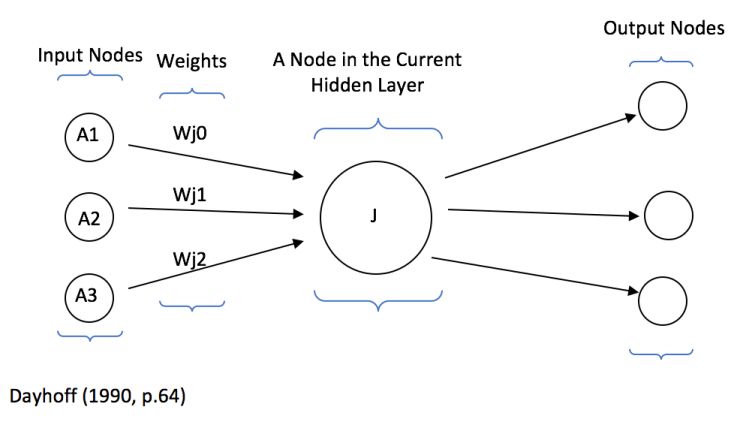

One of the most important aspects of a neural network and its ability to learn is forward propagation. Forward propagation is the way in which a neural network computes its output from its inputs using its current weights; the decision on how those weights should change to reach the correct outcome is made in the back-propagation step. To describe forward propagation, it helps to visualise a single node.

The steps of forward propagation:

- The node receives input from the previous layer of nodes. If the node is within the input layer, it receives its input in the form of dataset values (each node within the input layer corresponds to one entry of the input vector).

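- The node then multiplies each input it has received by the node's corresponding weight and sums the results. This is done for every input, that is, for all nodes in the previous layer. Writing $x_i$ for the value received from node $i$ in the previous layer and $w_{ij}$ for the weight on the connection from node $i$ to node $j$, the incoming value $z_j$ of node $j$ can be formulated with the expression:

  $$z_j = \sum_{i} w_{ij} \, x_i$$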

Note: The addition of a bias has been omitted from this example for simplicity.

- After the incoming value is computed, node j applies an activation function to it to produce the node's output (this does not apply to the input layer, as each input node's value is simply the corresponding entry of the dataset vector).

- The use of an activation function is an important step because, depending on the function used, it allows the neural network to compute an output that is non-linear. Without it, stacking layers would still produce only a linear function of the inputs. This non-linearity also allows the output to indicate more precisely whether the node's threshold has been reached.

- This cycle is then repeated for each layer of the network, before back-propagation takes place.
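
The steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function names (`sigmoid`, `node_output`, `forward`), the choice of the logistic sigmoid as the activation function, and the example weights are all assumptions made for the example, and the bias is omitted as in the note above.

```python
import math


def sigmoid(z):
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def node_output(inputs, weights, activation=sigmoid):
    """Weighted sum of the incoming values, followed by the activation."""
    # Multiply each input by its weight and sum (bias omitted, as in the text).
    z = sum(w * x for w, x in zip(weights, inputs))
    return activation(z)


def forward(inputs, layers, activation=sigmoid):
    """Propagate `inputs` through `layers`, one layer at a time.

    `layers` is a list of layers. Each layer is a list of weight vectors,
    one per node, holding one weight per node in the previous layer.
    """
    values = list(inputs)  # input layer: dataset values pass through unchanged
    for layer in layers:
        values = [node_output(values, weights, activation) for weights in layer]
    return values


# Hypothetical network: 2 inputs, a hidden layer of 2 nodes, 1 output node.
hidden = [[0.5, -0.5], [1.0, 1.0]]
output = [[1.0, -1.0]]
result = forward([1.0, 1.0], [hidden, output])
```

Here `result` is a list with one value, the activation of the single output node. Swapping `sigmoid` for another activation function only requires passing a different callable as `activation`.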

Dayhoff, J. (1990) Neural Network Architectures. Boston: International Thomson Computer Press.